In this article, we will learn how to add leading zeros to a column in a Spark DataFrame using PySpark. We will use Databricks to run this demo program and explore the ways Apache Spark offers to pad values with leading zeros.

Problem Statement:

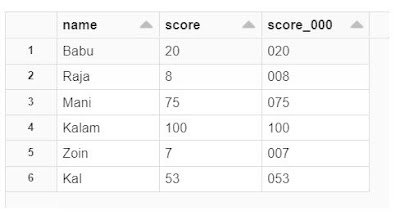

Consider an input Spark DataFrame with two columns, Name and Score, as shown in the figure.

Our requirement is to:

- Add leading zeros to the Score column so that every value has three digits, as shown in the output Spark DataFrame below. For example, convert 8 into 008.

Create Input DataFrame From List:

The piece of code below creates the input DataFrame: we build a Python list of rows with two columns (name and score) and convert the list into a Spark DataFrame.

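```python
# Create input Spark DataFrame
# (Databricks notebooks already provide `spark`; getOrCreate() simply reuses it)
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

list_data = [["Babu", 20], ["Raja", 8], ["Mani", 75], ["Kalam", 100], ["Zoin", 7], ["Kal", 53]]

df1 = spark.createDataFrame(list_data, ["name", "score"])

df1.show()
```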


Solution:

Apache Spark offers three different approaches to handle this scenario. You can use any of the methods below, depending on your business requirement, to get the required output.

- Using Format String

- Using lpad

- Using Concat and Substring

Let us implement each method one after another.

Method 1 - Using Format String:

format_string formats the given values printf-style and returns the result as a string column. We can import the Spark SQL function format_string and use it to add leading zeros (zero padding) to a column in a Spark DataFrame.

General Syntax:

format_string(format,*cols)

Parameters:

- format (str): a string with embedded format tags, such as %d or %s, used to format the result column value

- cols (Column or str): one or more columns of the Spark DataFrame to be formatted
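Because format_string uses printf-style patterns, we can preview what "%03d" produces with Python's own % operator, which follows the same conversion syntax for integers. This is a plain-Python sketch, not Spark code, and the helper name preview_format is hypothetical. For integer input, Spark's format_string (evaluated by Java's formatter) should produce the same strings, but it is worth confirming on your own cluster. Note that the 3 in %03d is a minimum width, so a value with more than three digits is returned unchanged rather than cut off.

```python
# Plain-Python preview of the printf-style pattern used with format_string.
# preview_format is a hypothetical helper for illustration; it does not call Spark.

def preview_format(pattern, values):
    """Apply a printf-style pattern to each integer, as format_string does per row."""
    return [pattern % v for v in values]

scores = [20, 8, 75, 100, 7, 53]  # sample scores from the input DataFrame
print(preview_format("%03d", scores))
# ['020', '008', '075', '100', '007', '053']

# The width is a minimum: wider values are not truncated
print(preview_format("%03d", [1000]))
# ['1000']
```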

Coming back to our use case, let us look at the code snippet that solves our problem statement.

Code Snippet using format_string:

```python
# format_string
from pyspark.sql.functions import format_string

# "%03d" zero-pads each integer score to a minimum width of three digits
df2 = df1.withColumn("score_000", format_string("%03d", "score"))
df2.show()
```

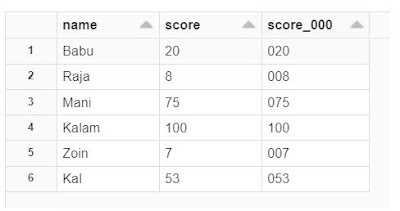


Method 2 - Using LPad Function:

The first option that comes to the mind of a SQL expert for padding is LPAD or RPAD, which is nothing but left padding or right padding. lpad left-pads a string column with the given pad string until it reaches the specified length.

General Syntax:

lpad(col,len,pad)

Parameters:

- col - Column or string to be padded

- len - Length of the resulting value after padding

- pad - the string to pad with (such as 0 or #)
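To see how len and pad interact, here is a plain-Python model of lpad's documented behavior: the pad string is repeated on the left until the value reaches len characters, and a value that is already longer than len is shortened to len characters. The function name spark_style_lpad is hypothetical, and the sketch assumes a non-empty pad and a non-negative len; it is meant for reasoning about results, not as Spark's implementation.

```python
# Plain-Python model of Spark's lpad(col, len, pad) semantics.
# spark_style_lpad is a hypothetical helper; assumes length >= 0 and a non-empty pad.

def spark_style_lpad(value, length, pad):
    s = str(value)  # lpad works on strings, so the number is treated as text
    if len(s) >= length:
        # Values already at least `length` characters long are shortened to `length`
        return s[:length]
    missing = length - len(s)
    # Repeat the pad string, then cut it to exactly the number of missing characters
    prefix = (pad * (missing // len(pad) + 1))[:missing]
    return prefix + s

scores = [20, 8, 75, 100, 7, 53]
print([spark_style_lpad(v, 3, "0") for v in scores])
# ['020', '008', '075', '100', '007', '053']

print(spark_style_lpad(1000, 3, "0"))  # truncated, unlike format_string
# 100
print(spark_style_lpad(7, 5, "ab"))  # a multi-character pad is repeated
# abab7
```

The truncation case is the main practical difference from format_string: if a score can exceed three digits, lpad silently drops its trailing characters.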

Code Snippet using Lpad:

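```python
# lpad
from pyspark.sql.functions import lpad

# Left-pad the score with "0" up to a total length of 3 characters
df2 = df1.withColumn("score_000", lpad("score", 3, "0"))

# display() renders the DataFrame in Databricks notebooks; use df2.show() elsewhere
df2.display()
```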


Method 3 - Using Concat and SubString:

This method is rarely used for a scenario like this, but it is included here to show an option that works without lpad or format_string. Unlike the other methods, here we combine a couple of PySpark SQL functions to achieve the output we need. First, we apply concat to prepend "00" to the score column, and then we apply substring to keep only the last three characters.
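The two steps can be traced in plain Python: prepend "00", then keep the last three characters, which is what substring selects with a starting position of -3 and a length of 3. This sketch (the helper name concat_substring is hypothetical, and it assumes non-null scores) also shows why the approach only suits values of one to three digits: a four-digit value loses its leading digit.

```python
# Plain-Python trace of concat(lit("00"), score) followed by substring(..., -3, 3).
# concat_substring is a hypothetical helper; it assumes non-null scores.

def concat_substring(value):
    padded = "00" + str(value)  # step 1: concat prepends two zeros
    return padded[-3:]          # step 2: keep the last three characters

scores = [20, 8, 75, 100, 7, 53]
print([concat_substring(v) for v in scores])
# ['020', '008', '075', '100', '007', '053']

print(concat_substring(1000))  # "001000" -> "000": the leading digit is lost
# 000
```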

Code Snippet using Concat and SubString:

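```python
# concat & substring
from pyspark.sql.functions import concat, substring, lit

# Prepend "00" so every score has at least three characters
df2 = df1.withColumn("score_000", concat(lit("00"), "score"))

# Keep the last three characters (a negative position counts from the end)
df3 = df2.withColumn("score_000", substring("score_000", -3, 3))

df3.display()
```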

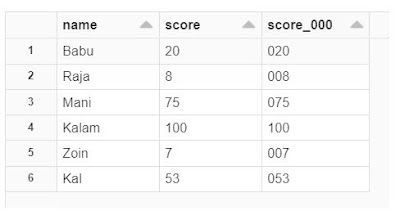


Attachments:

One can find the Python notebook used above at the link below.

Hope you learned how to solve this scenario-based problem in Apache Spark. Try it in your own Spark setup, and let me know in the comments if you have any trouble understanding the execution.

Happy Learnings !!!

Reference:

- https://spark.apache.org/docs/3.1.1/api/python/reference/api/pyspark.sql.functions.format_string.html

- https://spark.apache.org/docs/3.1.1/api/python/reference/api/pyspark.sql.functions.lpad.html
