Data Processing and Validation with Apache Spark: How to Find if a Spark DataFrame Is Empty or Non-Empty?

As a data scientist or data engineer, you will often need to check whether a Spark DataFrame is empty. This is an important step in data validation: catching an empty result early helps keep your downstream processing and analysis accurate and reliable. In this post, we'll explore the methods available in Apache Spark to check whether a given DataFrame is empty or non-empty.

Scenario-based questions like this come up often in Apache Spark interviews. In real projects, too, we frequently need this kind of data validation to confirm that a Spark DataFrame still contains data after transformations.

In this tutorial, we use Databricks Community Edition to explore this use case.

Use Case:

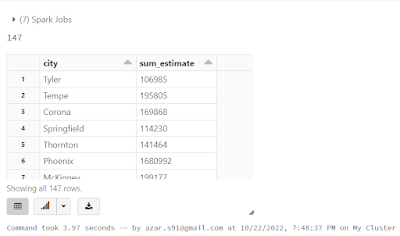

Consider a sample population dataset. We will read the file and perform some transformations, as shown below.

Sample Dataset:

The dataset can be downloaded from the Git link below.

Question:

Suppose we apply some transformations to the input file after reading it as a DataFrame, as shown below.

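    # Import libraries (aliasing the module avoids shadowing Python's built-in sum)
    from pyspark.sql import functions as F

    # Input location
    file_location = "/FileStore/tables/uspopulation.csv"

    # Read the input file: pipe-delimited CSV with a header row
    # (`spark` is the SparkSession that Databricks notebooks provide)
    in_data = (
        spark.read
        .option("header", True)
        .option("inferSchema", True)
        .option("delimiter", "|")
        .csv(file_location)
    )

    # Apply transformation logic: drop NY rows, then total the 2019 estimate per city
    group_data = (
        in_data.filter("state_code != 'NY'")
        .groupby("city")
        .agg(F.sum("2019_estimate").alias("sum_estimate"))
    )

    # Display output
    print(group_data.count())
    group_data.display()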

- What are the ways to check whether the Spark DataFrame group_data is empty?

Solutions:

There are several ways to check whether a Spark DataFrame is empty. Let's go through them one by one.

Method 1 - Use count() Function:

The simplest approach is the count() action, which counts the rows in every partition and returns the total. If the count is zero, the DataFrame is empty.

Here's the code snippet to illustrate this:

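    # Compute the count once and reuse it (each count() call triggers a Spark job)
    row_count = group_data.count()
    print(row_count)

    # Prints "True" when the DataFrame has at least one row
    if row_count > 0:
        print("True")
    else:
        print("False")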

Note: count() has to process the whole DataFrame, so depending on the data volume and the transformations involved, this action may take a long time to complete.
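One possible way to soften the cost mentioned in the note is to cap the number of rows before counting. The following is a minimal sketch and not part of the original notebook: `is_empty_by_count` is a hypothetical helper name, and it assumes `df` is a Spark DataFrame (or any object exposing `limit()` and `count()`). How much time this saves depends on the query plan, since upstream aggregations may still have to run.

```python
def is_empty_by_count(df):
    """Return True if df has no rows, counting at most one row.

    Hypothetical helper: assumes df behaves like a Spark DataFrame,
    i.e. it provides limit(n) and count().
    """
    # limit(1) keeps at most one row, so count() can only return 0 or 1
    return df.limit(1).count() == 0
```

In the notebook above, this could be called as `is_empty_by_count(group_data)`.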

Method 2 - Use isEmpty() Function:

The next method is the isEmpty() function, which returns a boolean indicating whether the DataFrame is empty.

Here's the code snippet to illustrate this:

Syntax:

    group_data.rdd.isEmpty()

Out[]:

False

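Note: In PySpark versions before 3.3.0, isEmpty() cannot be called on a DataFrame, so we convert the Spark DataFrame into an RDD and apply isEmpty() as shown in the syntax above. From PySpark 3.3.0 onward, the DataFrame has its own isEmpty() method, so group_data.isEmpty() also works.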

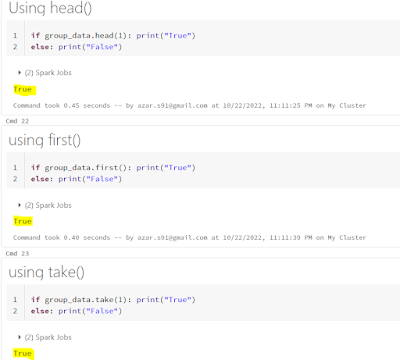
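If the same notebook has to run on clusters with different Spark versions, the isEmpty() check can be wrapped in a small version-tolerant helper. This is only a sketch under stated assumptions: `is_df_empty` is a hypothetical name, and the helper assumes `df` is a PySpark DataFrame that either has its own `isEmpty()` method (PySpark 3.3.0 and later) or exposes `.rdd.isEmpty()`.

```python
def is_df_empty(df):
    """Return True if df has no rows.

    Hypothetical helper: prefers DataFrame.isEmpty() when the installed
    PySpark provides it, and falls back to the RDD-based check otherwise.
    """
    if hasattr(df, "isEmpty"):
        # DataFrame.isEmpty() is available in PySpark 3.3.0 and later
        return df.isEmpty()
    # Older versions: convert to an RDD and check that instead
    return df.rdd.isEmpty()
```

With the DataFrame from this post, `is_df_empty(group_data)` would be expected to return the same value as `group_data.rdd.isEmpty()`.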

Method 3 - Use head() or first() or take():

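    # Using head(): head(1) returns a list with at most one Row; an empty list is falsy
    if group_data.head(1):
        print("True")
    else:
        print("False")

    # Using first(): returns the first Row, or None if the DataFrame is empty
    if group_data.first() is not None:
        print("True")
    else:
        print("False")

    # Using take(): take(1) also returns a list with at most one Row
    if group_data.take(1):
        print("True")
    else:
        print("False")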
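Because head(1) and take(1) bring at most one row back to the driver, they fit naturally into a reusable validation guard placed after a transformation. The sketch below is illustrative only: `has_rows` and `require_non_empty` are hypothetical helper names, and the code assumes `df` is a Spark DataFrame (or any object with a `take(n)` method that returns a list).

```python
def has_rows(df):
    """Return True if df contains at least one row (fetches at most one)."""
    return len(df.take(1)) > 0


def require_non_empty(df, name="DataFrame"):
    """Raise ValueError if df is empty; otherwise return df unchanged."""
    if not has_rows(df):
        raise ValueError(f"{name} is empty after transformation")
    return df
```

A possible use in the notebook would be `group_data = require_non_empty(group_data, "group_data")`, so that later steps fail fast instead of silently processing an empty result.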

In summary, checking whether a Spark DataFrame is empty is an important step in data validation and manipulation. Any of the methods above will tell you whether a given Spark DataFrame is empty. With these techniques, you can catch empty results early and avoid potential errors in your data analysis.

Happy Learning
